Technical SEO Services in Bangladesh

Our technical SEO services offer a comprehensive in-depth audit and resolution of all technical issues affecting your website. We meticulously analyze various aspects such as site structure, link structure, content silos, click depth, and any crawling or indexing challenges. By addressing these areas, we optimize your website to perform better than your competitors in organic search results. We leverage every possible technical advantage, including mobile-friendliness and schema markup, to ensure your website achieves the best possible ranking.

What is Technical SEO and Why is it Important?

Technical SEO involves optimizing websites and servers to make them easier for search engines to crawl and index. To ensure a seamless online experience, it’s essential to audit your website’s SEO to identify areas for improvement. This audit will help you ensure that your site possesses the technical attributes that search engines favor, such as secure connections, responsive designs, and fast loading times.

Search engines like Google are dedicated to delivering the most relevant results to users. When Google’s robots crawl and evaluate web pages, they consider various factors, including those that impact the user experience, like page load speed. Additionally, search engine robots analyze your pages based on other factors, such as structured data, which plays a key role in how your site is understood and ranked.

Improving your site’s technical aspects allows search engines to crawl and comprehend it more effectively, potentially leading to higher rankings and even rich results. However, technical SEO is often the most complex and challenging part of the overall SEO strategy. A technical SEO checklist typically covers the elements used to assess and optimize a website’s content and structure, such as well-organized content silos, so that the site gains visibility for more relevant keywords.

If your site isn’t technically sound, search engines may not be able to access it at all, severely limiting your chances of ranking. Dynamic websites built with JavaScript frameworks, while beneficial in some respects, often lack basic SEO features out of the box, because content rendered in the browser can be harder for crawlers to process. These issues can usually be addressed with JavaScript libraries and plugins, for example ones that handle server-side rendering or meta tags. Because this work is specialized, it may be wise to hire an SEO expert as a consultant.

Technical SEO Services:

Optimize your website’s performance with expert technical SEO services. Improve crawlability, speed, and rankings. Get a free consultation today!

First things to look for when it comes to technical SEO services

1. Ensure the Website is Using HTTPS

What is this? HTTPS is a secure version of HTTP, indicating that the data between the website and your visitors is encrypted.

Why Important? Google has confirmed HTTPS as a ranking signal. It enhances user trust and security, which is vital for e-commerce sites and all websites collecting user data.
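As a rough illustration of an HTTPS audit, the sketch below flags URLs that are not served over HTTPS. It assumes you already have a list of URLs (for example, exported from a crawl); the function names `is_https` and `insecure_links` are hypothetical.

```python
from urllib.parse import urlparse


def is_https(url: str) -> bool:
    """Return True when the URL uses the https scheme."""
    return urlparse(url).scheme == "https"


def insecure_links(urls):
    """Return the URLs from the list that are not served over HTTPS."""
    return [u for u in urls if not is_https(u)]


if __name__ == "__main__":
    # Example URLs are placeholders, not real pages.
    crawled = ["https://example.com/", "http://example.com/cart"]
    print(insecure_links(crawled))
```

Any URL this reports is a candidate for a redirect to its HTTPS version.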

2. Improve Site Speed

What is this? Site speed refers to how quickly a website loads for a user.

Why Important? Site speed is a ranking factor for both desktop and mobile searches. Faster sites provide a better user experience, leading to higher engagement rates and lower bounce rates.

3. Mobile-Friendly Website

What is this? A mobile-friendly website is one that correctly displays on mobile and handheld devices.

Why Important? Google operates on a mobile-first indexing basis, meaning it predominantly uses the mobile version of the content for indexing and ranking.

4. Create an XML Sitemap

What is this? An XML sitemap is a file that helps search engines better understand your website while crawling it. It lists a website’s important pages, making sure Google can find and crawl them all.

Why Important? It helps ensure search engines can discover and index all important pages on your site, especially if your site has a large number of pages or lacks a strong internal linking structure.
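To make the format concrete, here is a minimal sketch that builds a sitemap using Python's standard library. The function name `build_sitemap` and the example URLs are assumptions for illustration; on WordPress an SEO plugin would normally generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = loc
        if lastmod:
            ET.SubElement(url_el, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/services/", None),
    ]))
```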

5. Implement Structured Data Markup

What is this? Structured data markup is code you add to your website to help search engines return more informative results for users.

Why Important? It can enhance search visibility through rich snippets, potentially increasing click-through rates.
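Structured data is commonly added as a JSON-LD script tag. The sketch below generates one for a schema.org `Organization`; the helper name `organization_jsonld` and the sample values are hypothetical, and the properties your pages need depend on the content type.

```python
import json


def organization_jsonld(name, url, logo_url=None):
    """Return a JSON-LD script tag describing an organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
    }
    if logo_url:
        data["logo"] = logo_url
    payload = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(organization_jsonld("Example Agency", "https://example.com/"))
```

The resulting tag goes in the page's HTML, and the markup can then be checked with a structured data testing tool before publishing.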

6. Check for Duplicate Content

What is this? Duplicate content refers to substantial blocks of content within or across domains that either completely match other content or are appreciably similar.

Why Important? It can confuse search engines and force them to choose which version of the content is most relevant. This can dilute link equity and impact rankings.
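One simple way to estimate how similar two pages are is to compare overlapping word sequences (shingles). The sketch below is an illustrative approach, not how any search engine actually detects duplicates; the function names and the shingle size of 3 are assumptions.

```python
def shingles(text, size=3):
    """Return the set of overlapping word sequences of the given size."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a, text_b, size=3):
    """Return a 0.0 to 1.0 score: shared shingles divided by all shingles."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 1.0  # two empty texts are treated as identical
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    page_a = "technical seo helps search engines crawl your site"
    page_b = "technical seo helps search engines index your site"
    print(round(jaccard_similarity(page_a, page_b), 2))
```

Pairs of pages with a high score are candidates for consolidation or a canonical tag.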

7. Ensure Clean and Structured URL Architecture

What is this? URL structure refers to the layout of your URLs. Ideally, they should be simple, understandable, and keyword-rich.

Why Important? A clean URL structure improves user experience and helps search engines understand the relevance of a page.
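As an example of what a clean URL segment looks like, this sketch turns a page title into a lowercase, hyphen-separated slug. The function name `slugify` is hypothetical, and most CMS platforms (including WordPress) do something similar automatically.

```python
import re
import unicodedata


def slugify(title):
    """Convert a title into a lowercase, hyphen-separated URL slug."""
    # Split accented characters into base letter + accent, then drop non-ASCII.
    normalized = unicodedata.normalize("NFKD", title)
    ascii_text = normalized.encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters other than a-z and 0-9 with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


if __name__ == "__main__":
    print(slugify("Technical SEO Services in Bangladesh!"))
```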

8. Optimize Robots.txt and Use Meta Robots Tags Properly

What is this? Robots.txt is a file at the root of your site that tells search engine crawlers which parts of your site you don’t want them to access. Meta robots tags are snippets of code that give crawlers instructions for how to crawl or index a web page’s content.

Why Important? Robots.txt controls crawler access to certain areas of your site, while meta robots tags help prevent the indexing of sensitive or duplicate content.
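Before deploying a robots.txt change, it can help to test which URLs the rules would block. The sketch below uses Python's standard `urllib.robotparser`; the helper name `can_crawl` and the sample rules are assumptions, and the parser's matching may differ from Google's in edge cases, so treat the result as a rough check.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt, user_agent, url):
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Sample rules for illustration only.
    rules = "User-agent: *\nDisallow: /wp-admin/\n"
    print(can_crawl(rules, "Googlebot", "https://example.com/wp-admin/options.php"))
    print(can_crawl(rules, "Googlebot", "https://example.com/blog/"))
```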

9. Check for 404 Errors and Redirects

What is this? 404 errors occur when a user tries to reach a non-existent page on your site. Redirects (like 301 redirects) forward users from one URL to another.

Why Important? Too many 404 errors can harm user experience and potentially affect your site’s ranking. Proper use of redirects helps maintain link equity and guide users and search engines to the desired content.
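Redirects that pass through several hops, or loop back on themselves, are worth finding during an audit. This sketch follows a redirect map to its final destination; the `redirects` dictionary stands in for rules you would export from your server or redirect plugin, and the function name and hop limit are assumptions.

```python
def resolve_redirects(start, redirects, max_hops=10):
    """Follow redirects from start and return the full chain of URLs visited."""
    chain = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in chain:
            raise ValueError(f"redirect loop detected at {current}")
        chain.append(current)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirects from {start}")
    return chain


if __name__ == "__main__":
    # Hypothetical redirect rules: old URL -> new URL.
    rules = {"/old": "/interim", "/interim": "/new"}
    print(resolve_redirects("/old", rules))
```

A chain longer than two entries means the first URL could be redirected straight to the final destination instead.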

10. Optimize Site Architecture and Internal Linking

What is this? Site architecture refers to how your website’s pages are structured and linked together. An optimized internal linking strategy ensures that important pages receive more link equity.

Why Important? Good site architecture and internal linking help search engines understand the hierarchy and importance of pages on your site, aiding in indexing and user navigation.
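Click depth, the number of clicks needed to reach a page from the homepage, is one way to measure site architecture. The sketch below computes it with a breadth-first search over an internal link map; the `links` structure and the function name `click_depths` are assumptions, and a real audit would build the map from a crawl.

```python
from collections import deque


def click_depths(links, home):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


if __name__ == "__main__":
    # Hypothetical internal links: page -> pages it links to.
    site = {
        "/": ["/services", "/blog"],
        "/services": ["/services/technical-seo"],
        "/blog": ["/services/technical-seo"],
    }
    print(click_depths(site, "/"))
```

Pages missing from the result are not reachable through internal links at all, and pages with a high depth may need links from more prominent pages.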

11. Secure Your Website from Hacking and Spam

What is this? Implement security measures to protect your site from malicious attacks and spam.

Why Important? Security breaches can lead to site blacklisting by search engines, loss of user trust, and significant impacts on SEO performance.

12. Optimize Page Experience Signals

What is this? Page experience signals include Core Web Vitals and other factors like mobile-friendliness, safe browsing, HTTPS, and intrusive interstitial guidelines.

Why Important? Google uses page experience signals in its ranking criteria to measure how users perceive the experience of interacting with a web page beyond its informational value.
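For the Core Web Vitals part of page experience, Google publishes "good" and "poor" thresholds for each metric. The sketch below rates a measurement against the thresholds as documented at the time of writing (LCP in seconds, INP in milliseconds, CLS unitless); verify the current values before relying on them. The dictionary keys and the function name `rate_metric` are hypothetical.

```python
# (good upper bound, poor lower bound) per metric; assumed current values.
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "inp_ms": (200, 500),  # Interaction to Next Paint, milliseconds
    "cls": (0.1, 0.25),    # Cumulative Layout Shift, unitless
}


def rate_metric(metric, value):
    """Classify a measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    print(rate_metric("lcp_s", 2.1))
    print(rate_metric("inp_ms", 350))
    print(rate_metric("cls", 0.3))
```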

1. Crawlability: Crawlability is the ability of a search engine to access a web page and crawl its content. (Google)

Crawlability Checklist: ✔Create an XML Sitemap, ✔Optimize Site Architecture, ✔Set URL Structures, ✔Utilize Robots.txt, ✔Add Breadcrumbs Menu

2. Indexability: Once Google’s crawler has successfully crawled a website, Google can index it, which means storing the website in its database.

Indexability Checklist: ✔Unblock Google Robots From Accessing the Page, ✔Remove Duplicate Content, ✔Audit Your Site Speed, ✔Make Your Site Mobile Friendly, ✔Fix Broken Links.

3. Accessibility: When crawling and indexing both work correctly, the website becomes accessible to users, who can visit it and spend time on it.

4. Rankability: The more users engage with the website, the better its chances of ranking and showing up in the Google SERP.

Rankability Checklist: ✔Crawlability, ✔indexability, and the other SEO functions all need to be in good order.

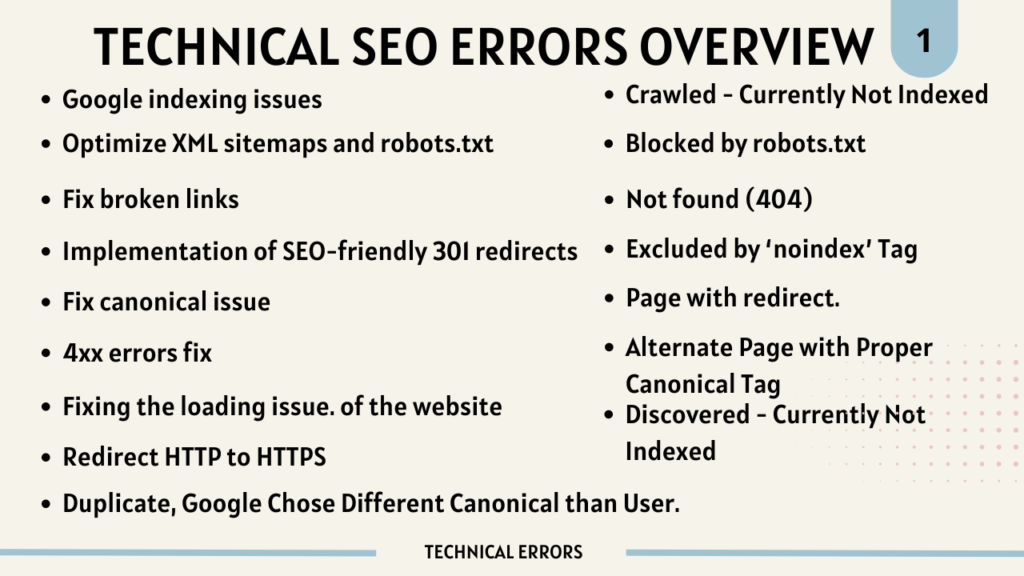

Technical SEO Errors in Google Search Console: Brief and Solutions

Technical SEO errors in Google Search Console include issues like broken links, slow page speed, and mobile usability errors. Solutions involve fixing broken links, optimizing images, and ensuring a responsive design to improve user experience and search rankings.

The individual errors are covered below. To find them, open Google Search Console, go to Pages, and switch the view from all known pages to your submitted sitemaps. The sitemap XML and sitemap index XML views contain the real errors, and these are the ones to solve.

1. Page with Redirect

Definition: This error occurs when a page is redirected to a different page, which might confuse search engines about which page to index.

Solution: Check if the redirect is necessary. If it is, make sure it’s a 301 redirect (permanent) rather than a 302 redirect (temporary). For WordPress, you can manage redirects using plugins like “Redirection”.

2. Excluded by ‘noindex’ Tag

Definition: A ‘noindex’ tag instructs search engines not to index a page. This tag can be added either manually to the HTML or through WordPress settings/plugins.

Solution: Check if the ‘noindex’ tag is intentionally placed. If you wish to have these pages indexed, remove the tag. This can be done by editing the page’s HTML, or by using SEO plugins like Yoast SEO where you can control index settings.
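To check pages in bulk for an unintended ‘noindex’, you can scan their HTML for a robots meta tag. This is a minimal sketch using Python's standard `html.parser`; the class and function names are hypothetical, and it only inspects the HTML, not the `X-Robots-Tag` HTTP header, which can also carry a noindex directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(has_noindex(page))
```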

3. Not Found (404)

Definition: This indicates that a page is not found (404 error). Pages might have been deleted or the URL could be incorrect.

Solution: Ensure all links on your website are correct. For deleted pages, consider creating redirects to relevant pages. WordPress plugins like “Redirection” can help manage this.

4. Blocked by robots.txt

Definition: This error means a page is being blocked from being crawled by the robots.txt file, which tells search engines which parts of your site they can and can’t crawl.

Solution: Review your robots.txt file and adjust it to allow search engines to crawl these pages. This file can be edited directly from the root directory of your WordPress site.

5. Alternate Page with Proper Canonical Tag

Definition: This status indicates that the page is an alternate version of another page and correctly points to that page as the canonical version, telling search engines which version to prioritize.

Solution: Ensure the canonical tag is correctly implemented, particularly if you have similar or duplicate content. This can be managed through WordPress SEO plugins like Yoast SEO.
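When reviewing canonical tags, it helps to extract the canonical URL a page declares and compare it with the URL you expect. The sketch below does this with Python's standard `html.parser`; the names `CanonicalParser` and `find_canonical` are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the declared canonical URL, or None if the page has none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


if __name__ == "__main__":
    page = '<head><link rel="canonical" href="https://example.com/page/"></head>'
    print(find_canonical(page))
```

If the value returned differs from the URL you intended to be canonical, the tag or the plugin setting that generates it needs correcting.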

6. Crawled – Currently Not Indexed

Definition: The page was crawled by Google, but not indexed. This could be due to low content quality or other issues.

Solution: Improve the page’s content quality, making sure it provides value and is unique. Also, ensure the page is well-linked within your site to increase its importance.

7. Discovered – Currently Not Indexed

Definition: Google is aware of the page but hasn’t crawled it yet, possibly due to crawl budget constraints or low site priority.

Solution: Improve the site’s overall SEO and ensure there are no technical barriers to crawling. Enhancing the site’s internal linking structure can also help.

8. Duplicate, Google Chose Different Canonical than User

Definition: This means Google has detected duplicate content and chosen a different canonical page than the one you suggested.

Solution: Review your canonical tags to ensure they are correctly implemented. Make sure the content on the canonical page is the most comprehensive and useful version. Plugins like Yoast SEO can help manage these tags.